I have always maintained a private bucket list, but I have never had the courage to actually put it down in writing. This year I decided that it is time. My good friend Brent Ozar has been doing this for a few years now, and his list is my inspiration; his post also links to the original post that inspired him. My goals for the next 15 years are below. I have put them in three categories: 2017 (this year), 2018 (next year), and long term.

2017:

1 Complete the Microsoft Data Science Program and the Diploma in Healthcare Analytics from UC Davis.

2 Stick to my blogging goals: one blog post per week and one contribution to sqlservercentral.com every two weeks.

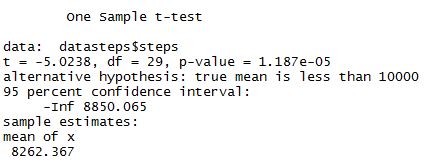

3 Keep up my exercise goals of 10,000 steps per day and one yoga workout per week.

4 Speak at my local user group as often as I can (my travel limitations do not allow me to speak at many SQL Saturdays or out-of-town events).

5 Submit to speak at the PASS Speaker Idol event.

6 Hike the Grand Canyon with my sister; we are travelling companions and love to see places together.

7 See at least two new countries: Mexico with SQL Cruise, and one more towards the end of the year that remains undecided for now. But two countries it is.

8 Blog about the books I read, so that I can understand how much time I devote to reading and the range of what I read in that time.

9 Get home renovation work done. I am undecided on whether I want to keep this condo or sell it, but either way, I’d have to get work done on it. It would be best to get it done this year, but it involves a considerable financial commitment that I am not sure I can meet. As of now it looks doable this year, but it may move to next year if I have to reset goals.

10 Increase my collection of annotated classics by year end. This is an ongoing goal to build a library for retirement. The only books I buy in print are annotated ones or those with pictures. There are not many of those, and my collection is already at 30-40% of what I need. I add to it at a rate of about 3-4 books a year.

11 Take a course on cartooning and one on short story writing. Both are pet hobbies of mine that I have never had as much time for as I’d like, so this year I would like to at least take a course on each to deepen my love of and interest in them.

2018:

1 Submit to speak at PASS Summit.

2 Organize SQL Saturday #10 in Louisville (it is not yet clear how different this one will be…).

3 Keep up the same exercise goals.

4 Visit one new country with my sister; I am looking at Bali/Indonesia right now.

5 Visit one more new country, hopefully on SQL Cruise, or on my own. Either way, I will do it.

6 The biggie: pay off my mortgage. Yes, this is important, and I am not that far away. The only thing holding me back is that I am a bit undecided on how long I can live here, with job opportunities being what they are. But I will assume those stay the same, in which case the house will be ready to be paid off in 2018.

7 Do actual analytics work. By then I will have a reasonable understanding of R, SAS and Microsoft data science skills, and I expect this to take me to the next level professionally.

Long term goals:

1 Be an analytics consultant. Right now I am looking at healthcare analytics, but the area of application may change depending on how my career pans out. In my 60s I hope to have the knowledge and expertise to be a consultant, hopefully without a lot of travel, make the kind of $$$ I want to make, and work only the hours I want to.

2 Be respected in the SQL/data community for both my knowledge and my community work.

3 Attend the weddings of my favorite niece and nephew. It has been 25+ years since I attended an Indian wedding. It goes back to the day I attended the wedding of a very wealthy classmate/friend, was treated without much respect, and vowed never to set foot in another wedding. And I have not. But there are two youngsters whose weddings I’d like to attend, breaking that vow. I will not name them, because I do not want them to feel pressure around getting married (which is already enormous in Indian culture), but if they choose to marry and have a wedding, I’d love to attend and offer my love and blessings.

4 Buy a home by the beach in my native Chennai, where I want to retire. Honestly, this looks like the most difficult one to pull off. Real estate costs a lot of $$$, and homes by the beach are even more expensive. Also, for a lot of NRIs (non-resident Indians) this depends on how well the USD holds up against other currencies. But I will put it there and see what fate deals me and what I can do to make this happen.

5 Travel… travel… travel. This list is so enormously long that I don’t know where to start, but here are the top 12 destinations I want to visit in this lifetime.

1 Bali, Indonesia – for the temples, culture, food and scenic beauty. I’ve read about Bali and always wanted to visit, but never found the time.

2 Thailand, Singapore and Malaysia – I group these together as they are close and can be done in one trip. Singapore for the sheer inspiration of one of the world’s safest and most successful economies; Thailand and Malaysia for the Buddhist temples and culture.

3 Holland – for the tulip/flower festival. I am a flower buff and have been enamored of Holland’s flower festival for years. I am looking at a National Geographic tour for this, but may look at cheaper options too.

4 Belgium – for Tintin and chocolate. Enough said.

5 Norway – for the wonderful fjords and scenic beauty.

6 UK – cultural connections and so many places to see. I think I’d need a month in London alone.

7 Revisit Italy and Spain with my sister. I so love these two countries, Italy in particular, for the food and the history. I love showing off what I’ve already seen to family members, and she is my first pick to show off to :))

8 Australia/New Zealand – no reason not to go, and I have family members there.

9 Costa Rica/Panama Canal – hopefully this will happen soon.

10 Switzerland – scenic beauty and chocolate, again.

11 Austria – Sound of Music country; impossible not to visit.

12 Antarctica – last but not least, the ice-laden continent would be a great item to check off the bucket list.

Aside from these places, I’d love to see as many national parks in the USA as possible, and many, many places in India, too many to list. I am getting a National Geographic map with pins to put on my wall next year, and hopefully when I am done there won’t be too many unpinned places on it. I look forward to updating this list each year as I go along and seeing where I get with it.

Thank you, Brent Ozar and Steve Jones, for your inspiration.